Tilt Matching for Scalable Sampling and Fine-Tuning Jan 2026

With Peter Potaptchik and Michael Albergo

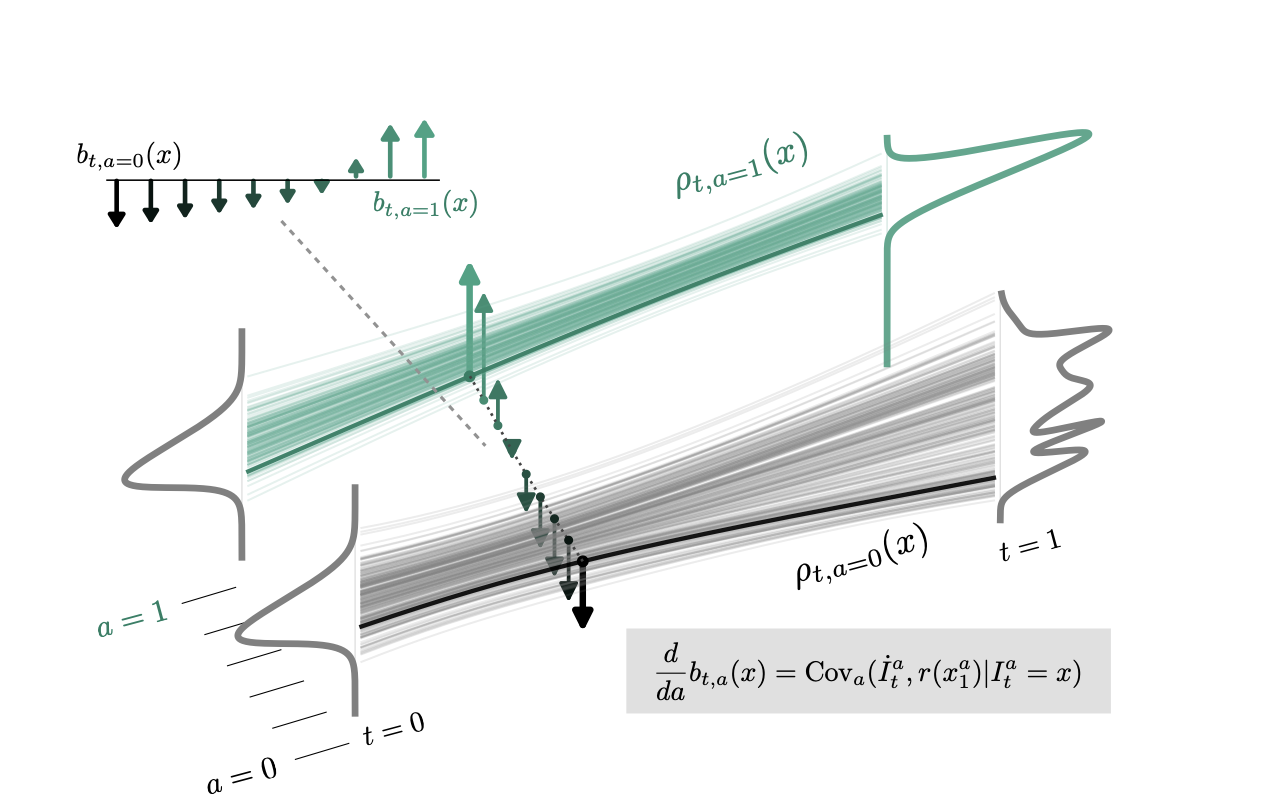

We propose a simple, scalable algorithm for using stochastic interpolants to sample from unnormalized densities and for fine-tuning generative models. The approach, Tilt Matching, arises from a dynamical equation relating the flow matching velocity to one targeting the same distribution tilted by a reward, implicitly solving a stochastic optimal control problem.

The new velocity inherits the regularity of stochastic interpolant transports while also being the minimizer of an objective with strictly lower variance than flow matching itself. The update to the velocity field can be interpreted as the sum of all joint cumulants of the stochastic interpolant and copies of the reward, and to first order is their covariance.
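
The first-order statement can be made concrete with a short derivation. This is a sketch in assumed notation that does not appear above: $I_t$ is the stochastic interpolant between a base sample $x_0$ and a data sample $x_1$, $r$ is the reward, $a$ is a scalar tilt strength, and the tilted target is taken to be proportional to $p_1(x_1)\,e^{a r(x_1)}$. The paper's own notation and conventions may differ.

```latex
% Assumed notation: b_t is the flow matching velocity, b_t^a its tilted counterpart.
\begin{align}
  b_t(x)   &= \mathbb{E}\big[\dot I_t \,\big|\, I_t = x\big], \\
  b_t^a(x) &= \frac{\mathbb{E}\big[\dot I_t \, e^{a r(x_1)} \,\big|\, I_t = x\big]}
                   {\mathbb{E}\big[e^{a r(x_1)} \,\big|\, I_t = x\big]}, \\
  \partial_a b_t^a(x)
           &= \mathbb{E}_a\big[\dot I_t \, r(x_1) \,\big|\, I_t = x\big]
            - \mathbb{E}_a\big[\dot I_t \,\big|\, I_t = x\big]\,
              \mathbb{E}_a\big[r(x_1) \,\big|\, I_t = x\big]
            = \operatorname{Cov}_a\big(\dot I_t,\, r(x_1) \,\big|\, I_t = x\big), \\
  b_t^a(x) &= b_t(x) + a \, \operatorname{Cov}_0\big(\dot I_t,\, r(x_1) \,\big|\, I_t = x\big) + O(a^2).
\end{align}
```

Here $\mathbb{E}_a$ denotes expectation under the coupling reweighted by $e^{a r(x_1)}$. Differentiating further in $a$ produces the higher-order joint cumulants of $\dot I_t$ with additional copies of $r(x_1)$, which is one way to read the cumulant interpretation above.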

The algorithms require neither gradients of the reward nor backpropagation through trajectories of the flow or diffusion. We empirically verify that the approach is efficient and highly scalable: it achieves state-of-the-art results on sampling under Lennard-Jones potentials and is competitive on fine-tuning Stable Diffusion, without requiring reward multipliers. It can also be straightforwardly applied to tilting few-step flow map models.

The theoretical groundwork for this algorithm was mostly developed by Peter and Michael; please reach out to them with any questions.

Any-Order Flexible Length Masked Diffusion Aug 2025

With Jaeyeon Kim, Carles Domingo-Enrich, Sham Kakade, Yilun Du, Timothy Ngotiaoco, Sitan Chen, and Michael Albergo

We introduce FlexMDM, a class of masked diffusion models for variable-length data based on the joint interpolant, an extension of the stochastic interpolant framework.

We further show that FlexMDM retains the any-order sampling guarantees of masked diffusion as established in prior work by Jaeyeon Kim and Kulin Shah.

With wonderful co-author Jaeyeon Kim, we demonstrate that the method scales to 8B parameters and achieves notable performance improvements over previous masked diffusion models.

Also see concurrent work EditFlow.

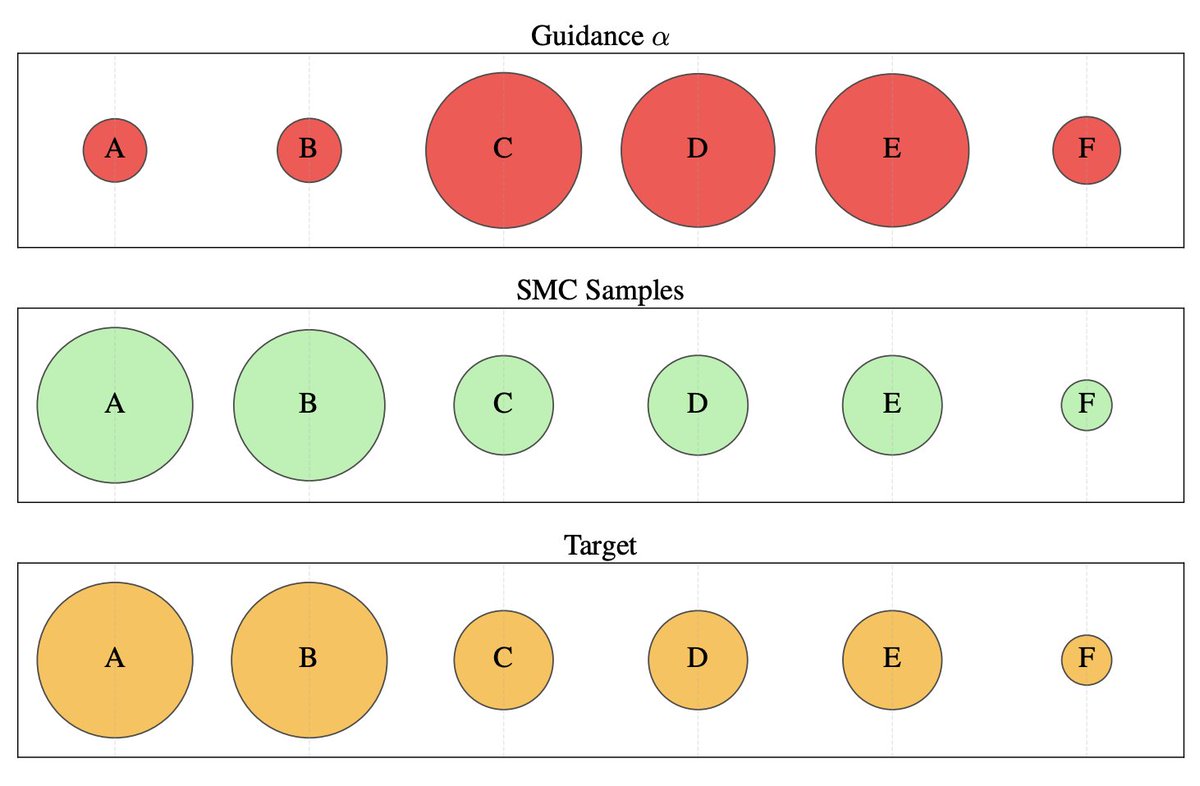

Debiasing Guidance with Sequential Monte Carlo Jan 2025

With Paul Jeha, Jes Frellsen, Michael Albergo, Pietro Lio, and Francisco Vargas

Guidance in diffusion models is biased. By using a specific choice of intermediate distribution, this bias can be corrected with Sequential Monte Carlo without extra function evaluations.
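
For readers unfamiliar with Sequential Monte Carlo, the generic reweight-and-resample step it relies on can be sketched as below. This is a minimal illustration of standard multinomial resampling, not the scheme from the paper: the specific intermediate distributions and weight updates are not reproduced, and all function names (`normalize_log_weights`, `effective_sample_size`, `resample`) are hypothetical.

```python
import numpy as np


def normalize_log_weights(log_w):
    """Turn unnormalized log-weights into probabilities that sum to 1."""
    log_w = np.asarray(log_w, dtype=float)
    # Subtract the maximum before exponentiating for numerical stability.
    w = np.exp(log_w - np.max(log_w))
    return w / w.sum()


def effective_sample_size(weights):
    """ESS = 1 / sum(w_i^2); equals N for uniform weights, 1 if one particle dominates."""
    weights = np.asarray(weights, dtype=float)
    return 1.0 / np.sum(weights ** 2)


def resample(particles, log_w, rng):
    """Multinomial resampling: draw N particles with probability proportional to weight.

    Returns the resampled particles and their log-weights, which are reset to
    zero (uniform) after resampling.
    """
    particles = np.asarray(particles)
    weights = normalize_log_weights(log_w)
    n = len(particles)
    idx = rng.choice(n, size=n, p=weights)
    return particles[idx], np.zeros(n)
```

In a typical SMC loop, each particle is propagated one step, its log-weight is incremented by a correction term, and `resample` is called only when `effective_sample_size` drops below a chosen threshold (for example, half the number of particles).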

Also see concurrent work Feynman-Kac Correctors for a similar scheme, and follow-up work Radon-Nikodym Estimators for a more systematic approach to derive Sequential Monte Carlo schemes for general targets.